Challenges:

A US-based security services provider faced challenges with its on-premises Oracle and Cloudera Hadoop environment: queries and code took excessively long to execute, and operating costs were high.

Objective:

The client wanted to build a unified data warehouse on GCP that leverages cloud-native technologies, lowers operational costs, and offers better risk mitigation than its current on-premises solutions. To that end, the client also wanted to migrate the existing data warehouse functionality from on-premises Oracle and Cloudera Hadoop to GCP.

Another objective was to enable end users to run SQL queries on Google BigQuery even before the actual application migration.

Solutions:

- Datametica helped the client migrate the historical and incremental loads of its on-premises Oracle and Cloudera Hadoop databases to Google Cloud Platform.

- Datametica performed a one-time history load: data was first loaded from the source system into a GCS bucket and then moved into Google BigQuery.

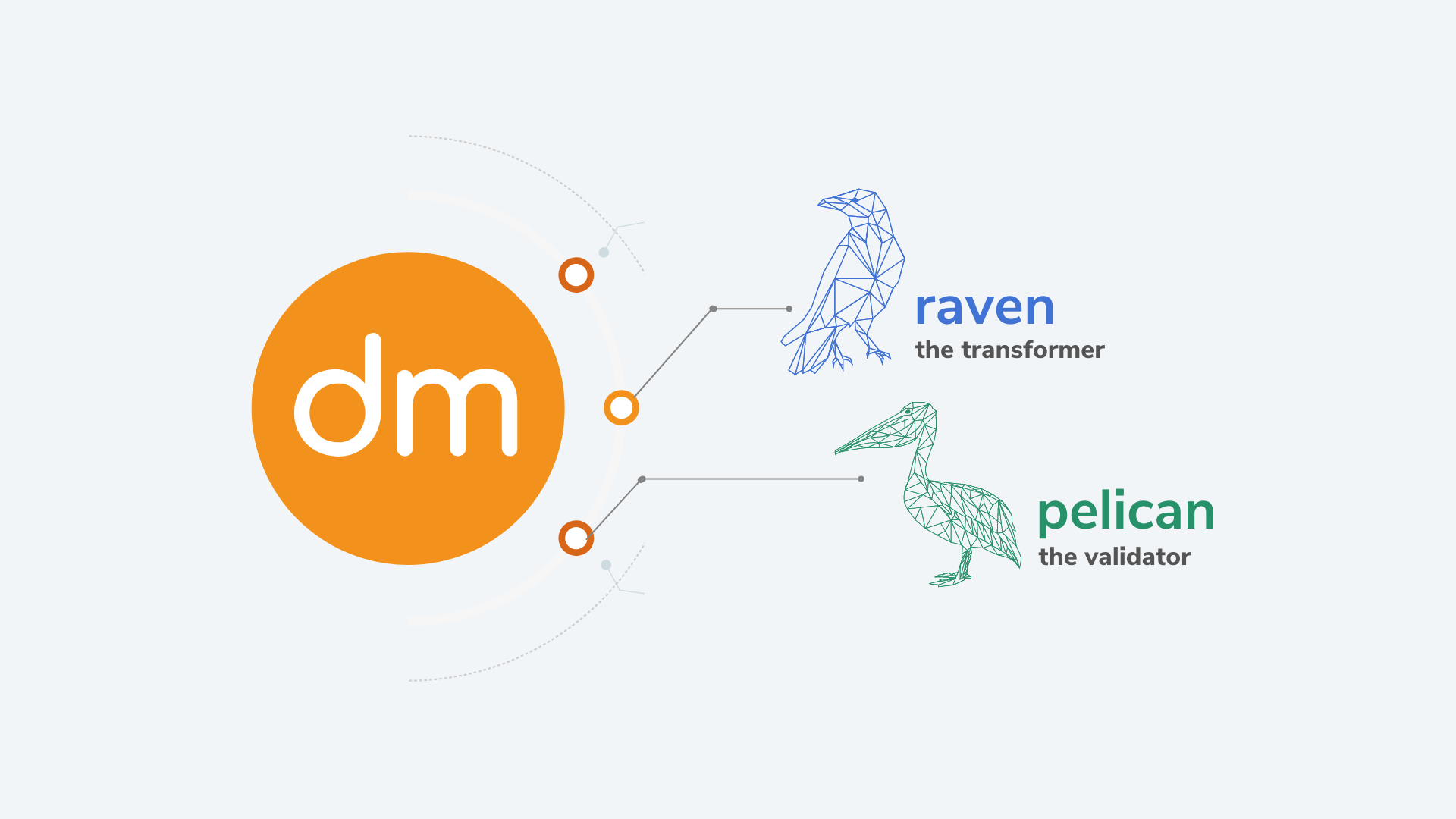
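As a rough illustration of the second step of the history load (GCS bucket to Google BigQuery), the sketch below assembles a `bq load` command line. The dataset, table, and bucket names are hypothetical, and the Parquet file format is an assumption; the case study does not say which format or loading mechanism was actually used.

```python
import shlex


def build_bq_load_command(table, gcs_uri, source_format="PARQUET", replace=False):
    """Return the argument list for a `bq load` call that loads GCS files into a table."""
    args = ["bq", "load", f"--source_format={source_format}"]
    if replace:
        # --replace overwrites the destination table instead of appending to it.
        args.append("--replace")
    args += [table, gcs_uri]
    return args


# Hypothetical dataset, table, and bucket names (not from the case study).
command = build_bq_load_command(
    "warehouse.orders_history",
    "gs://example-history-bucket/orders/*.parquet",
    replace=True,
)

# Print a shell-safe version of the command for review before running it.
print(shlex.join(command))
```

Building the argument list separately from executing it keeps the load step easy to review and to pass to `subprocess.run` later.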

- Converted Oracle SQL code to Google BigQuery SQL using Raven, Datametica’s automated workload conversion tool.

- Finally, the Google BigQuery data was compared with the source Oracle data using Pelican, Datametica’s automated validation tool, to ensure data integrity and quality.

- Pelican was also used to validate the data loaded into Google BigQuery through a field-level comparison with the data on the on-premises Hadoop platform.

- Implemented the existing database model with an optimal configuration of partition and cluster keys for cost optimization.

- Implemented best practices suited to Google BigQuery for efficient, performant execution.

- Performed a comparative analysis of execution times in Google BigQuery against on-premises Oracle execution times.

- Datametica established IAM roles, firewalls, and VPCs for the GCP projects.

- Leveraged various Google-native tools, such as Transfer Appliance, Google Cloud Storage, Google BigQuery, Cloud Dataproc, Cloud Composer, Stackdriver, and Cloud Dataflow, to deliver a complete, successful Google Cloud implementation.

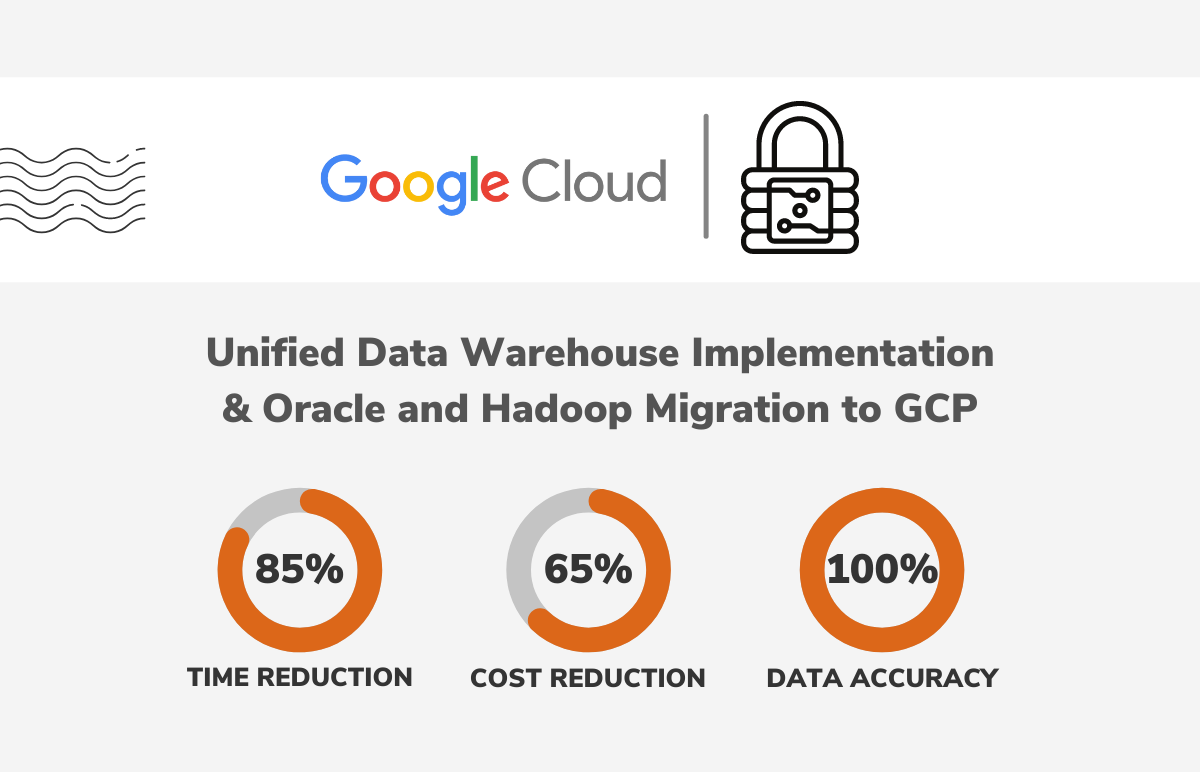
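The field-level comparison mentioned in the validation steps above can be pictured with a minimal sketch. This is not Pelican's actual method, which the case study does not describe; the function name, the sample rows, and the choice of `id` as the matching key are all hypothetical.

```python
def compare_fields(source_rows, target_rows, key):
    """Compare two row sets field by field, matching rows on `key`.

    Returns (mismatches, missing_in_target, missing_in_source), where each
    mismatch is a (key_value, field, source_value, target_value) tuple.
    """
    source_by_key = {row[key]: row for row in source_rows}
    target_by_key = {row[key]: row for row in target_rows}

    mismatches = []
    # Only rows present on both sides can be compared field by field.
    for k in sorted(source_by_key.keys() & target_by_key.keys()):
        src, tgt = source_by_key[k], target_by_key[k]
        for field in sorted(src.keys() | tgt.keys()):
            if src.get(field) != tgt.get(field):
                mismatches.append((k, field, src.get(field), tgt.get(field)))

    missing_in_target = sorted(source_by_key.keys() - target_by_key.keys())
    missing_in_source = sorted(target_by_key.keys() - source_by_key.keys())
    return mismatches, missing_in_target, missing_in_source


# Hypothetical sample rows standing in for the source system and BigQuery.
source = [
    {"id": 1, "name": "alpha", "amount": 10},
    {"id": 2, "name": "beta", "amount": 20},
    {"id": 3, "name": "gamma", "amount": 30},
]
target = [
    {"id": 1, "name": "alpha", "amount": 10},
    {"id": 2, "name": "beta", "amount": 25},
]

mismatches, missing_in_target, missing_in_source = compare_fields(source, target, "id")
print(mismatches)         # [(2, 'amount', 20, 25)]
print(missing_in_target)  # [3]
print(missing_in_source)  # []
```

Reporting missing keys separately from field mismatches distinguishes rows that never arrived from rows that arrived with altered values.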

Results:

- Reduced scan cost by implementing partitioning and clustering

- Reduced execution time by roughly 85%, from 70 minutes to 8 minutes

- Reduced cloud migration cost by 65%

- Ensured 100% data accuracy via Pelican
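
To see why partitioning lowers scan cost, consider a simplified model in which a date-partitioned table is scanned only in the partitions a query's date filter selects. The table size and date range below are hypothetical, and the model ignores clustering and column selection, which can reduce the bytes read further.

```python
from datetime import date, timedelta


def bytes_scanned(partition_sizes, start=None, end=None):
    """Sum bytes over partitions whose date falls in [start, end].

    With no bounds, every partition is read, which models a full table scan.
    """
    return sum(
        size
        for day, size in partition_sizes.items()
        if (start is None or day >= start) and (end is None or day <= end)
    )


# Hypothetical table: 365 daily partitions of 2 GiB each.
GIB = 1024 ** 3
first_day = date(2023, 1, 1)
partitions = {first_day + timedelta(days=i): 2 * GIB for i in range(365)}

full_scan = bytes_scanned(partitions)
pruned_scan = bytes_scanned(partitions, date(2023, 6, 1), date(2023, 6, 7))

print(full_scan // GIB)    # 730
print(pruned_scan // GIB)  # 14
```

In this toy setup, a one-week filter reads 14 GiB instead of 730 GiB. Actual savings depend on how queries filter on the partition key.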

