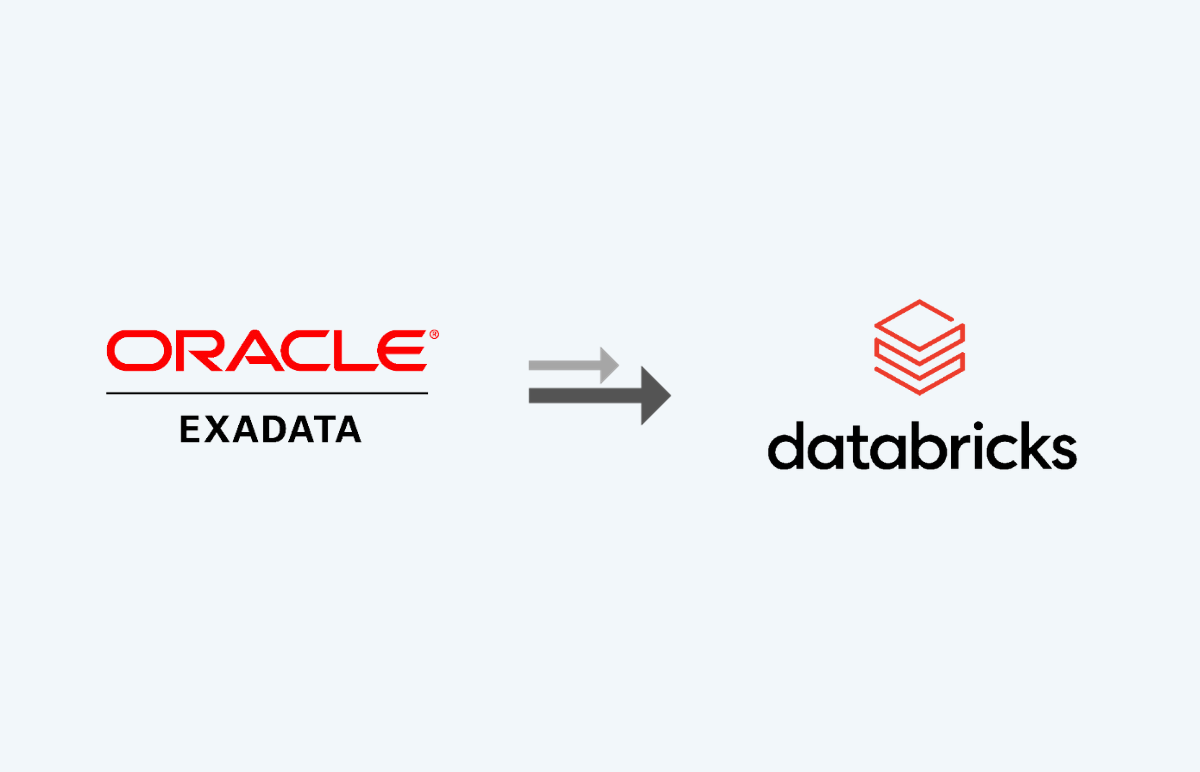

Challenges: The client wanted to migrate to a cost-effective platform and enhance performance

Our client, a leading US-based hospitality organization, wanted to migrate its existing Oracle Exadata data warehouse to a more robust and cost-effective platform: Databricks on Azure. To pave the way for this cloud migration, the client began a crucial MVP (minimum viable product) phase for its core application. This phase aimed to assess feasibility, evaluate performance, and validate business requirements.

Solutions:

Datametica played a pivotal role in orchestrating a successful MVP for the cloud migration project:

- The Datametica team began the project by identifying and analyzing the data sources within the Oracle Exadata environment.

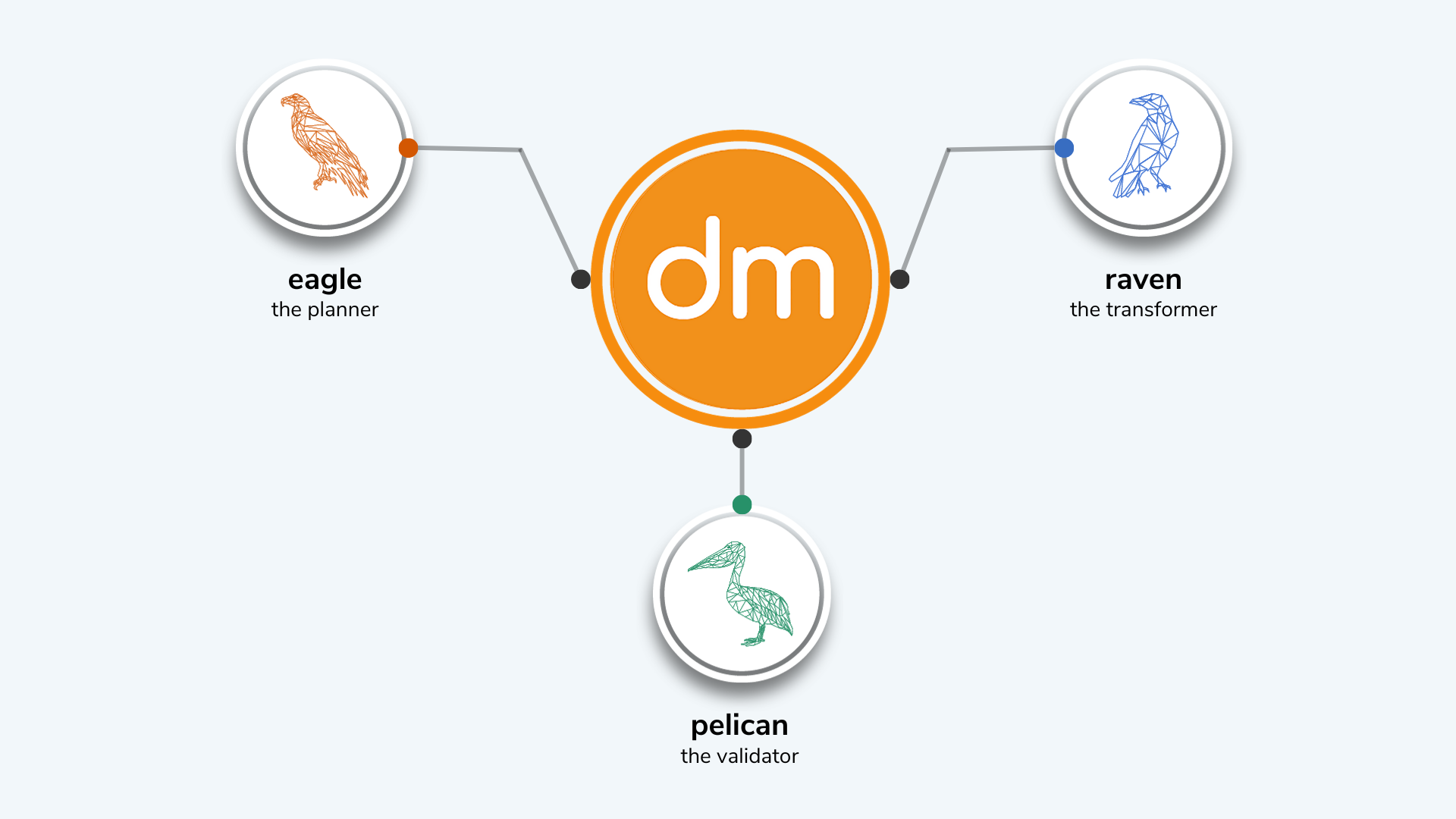

- Eagle, Datametica’s automated data assessment and planning tool, helped the team understand the data pipelines, complexity, volumetrics, table structures, data lineage, and overall design of the existing system.

- Eagle also helped create a detailed migration plan, including the future-state architecture and an estimated migration cost.

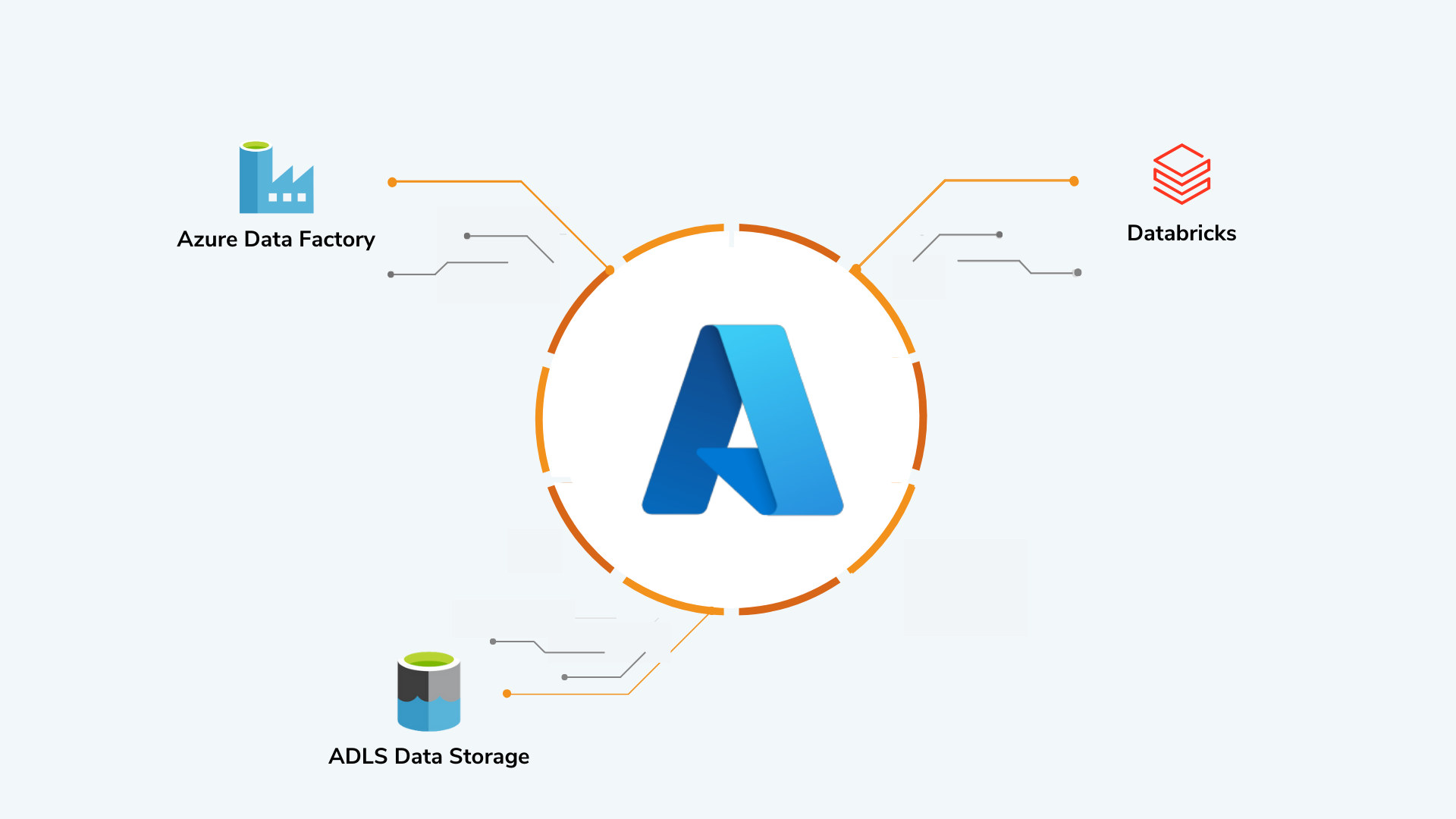

- A robust data migration pipeline was designed using Azure Data Factory (ADF). The pipeline was tailored to extract data from Oracle efficiently and used incremental data capture to track changes in the source system.

- Datametica used Raven, its automated code conversion service, to convert tables, views, packages, PL/SQL code, stored procedures, UDFs, Airflow jobs, Stambia jobs, and other database objects for compatibility with Azure Databricks.

- Existing ETL jobs and stored procedures (SPs) were rewritten or modified to use Databricks’ native functionality, optimizing data processing.

- Datametica set up orchestration and scheduling for the Raven-converted workloads using Autosys and Azure Data Factory. The Azure Synapse pipelines were scheduled to match the existing production schedule.

- Data integrity, accuracy, and performance were rigorously validated with Pelican, Datametica’s AI-powered automated data validation tool. Pelican’s cell-level validation ensured data integrity throughout the migration.

- The solution also produced data extracts suitable for loading into the Druid data store, enabling advanced analytics and reporting capabilities.
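To illustrate what "cell-level validation" means in general terms, here is a minimal, hypothetical sketch in plain Python. It is not Pelican's implementation or API. It assumes both systems' rows have already been fetched as dictionaries sharing a primary-key column, and it reports every individual cell whose value differs, plus rows present on only one side. The function name `cell_level_diff` and the sample data are illustrative only.

```python
from typing import Any, Dict, List, Tuple

Row = Dict[str, Any]
Mismatch = Tuple[Any, str, Any, Any]  # (key, column, source_value, target_value)


def cell_level_diff(source: List[Row], target: List[Row], key: str) -> List[Mismatch]:
    """Compare two row sets cell by cell, matching rows on `key` (hypothetical helper)."""
    target_by_key = {row[key]: row for row in target}
    mismatches: List[Mismatch] = []

    for src_row in source:
        k = src_row[key]
        tgt_row = target_by_key.get(k)
        if tgt_row is None:
            # Row exists in the source but was never loaded into the target.
            mismatches.append((k, "<row>", "present", "missing"))
            continue
        # Columns are compared from the source row's perspective.
        for col, src_val in src_row.items():
            tgt_val = tgt_row.get(col)
            if src_val != tgt_val:
                mismatches.append((k, col, src_val, tgt_val))

    # Rows that appear only in the target.
    source_keys = {row[key] for row in source}
    for k in target_by_key:
        if k not in source_keys:
            mismatches.append((k, "<row>", "missing", "present"))

    return mismatches


oracle_rows = [
    {"id": 1, "amount": 100.0, "city": "Austin"},
    {"id": 2, "amount": 250.5, "city": "Denver"},
]
databricks_rows = [
    {"id": 1, "amount": 100.0, "city": "Austin"},
    {"id": 2, "amount": 250.0, "city": "Denver"},
]

print(cell_level_diff(oracle_rows, databricks_rows, "id"))
# → [(2, 'amount', 250.5, 250.0)]
```

A row-count or checksum comparison would only flag that row 2 differs somewhere. The cell-level comparison pinpoints the exact column and both values, which is what makes this style of validation useful for migration sign-off.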

Client Benefits: Reduced runtime, cost estimation, accelerated MVP execution, and Azure-native transformation

Datametica’s strategic migration approach not only met the objectives but also unlocked substantial performance improvements and cost efficiencies, steering our client towards a modernized and optimized data infrastructure.

- Achieved a remarkable 78% reduction in runtime, greatly boosting data processing speed and efficiency.

- Provided precise estimates of Azure consumption costs, enabling effective migration planning and budgeting.

- The end-to-end MVP was executed in a mere 6 weeks, significantly expediting the progress toward a complete migration.

- All code and objects were successfully converted to Azure-native formats, ensuring seamless integration with Databricks on Azure.
